Artificial Neural Network or Ann is a deep learning technique to help machines learn from data by mimicking the human brain. Let’s think about our brains for a moment. How do we detect an object? For example, how do we know a thing is an Orange and not an apple? We see an apple and our eyes scan the features of the target object (apple in this case). Then, our brain starts to process these features by some neurons, telling us that the output is an apple.

Ann works very similarly to the brain. It has three main layers of decision-making:

1- Input layer: This is the layer of features. The one that the brain gets from our eyes.

2- Hidden layer(s): These are the internal hidden layer in our brain that process the input features. This layer’s job is to identify the key characteristics of the input features that help the brain to make a better decision.

3- Output layer: This is the layer that actually makes the decision. It tells us we are watching an apple.

In this post, we want to do a classification task using Ann in python.

Note: I am not explaining what is Ann in this post since there are many resources for Ann concept. Here you can find the intuition and the code.

Here is the link to the full notebook: https://www.kaggle.com/code/oladazimi/ann-classification-acc-90-to-95

Task and Data

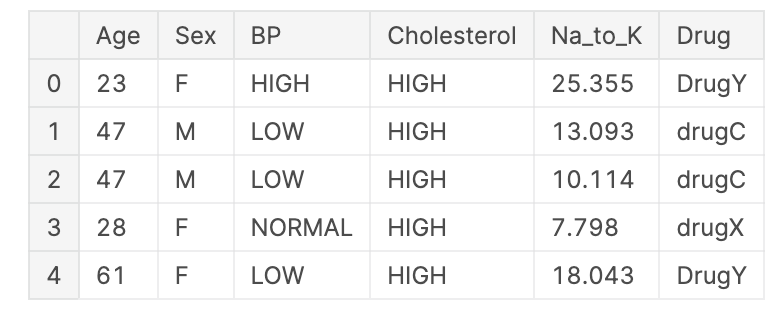

The task here is to classify some patients to the drug that they need based on these features:

- Age

- Sex

- Blood Pressure Levels (BP)

- Cholesterol Levels

- Na to Potassium Ration

You can find this data here: https://www.kaggle.com/datasets/prathamtripathi/drug-classification

The outputs are five drugs.

First, we load the data:

import pandas as pd

data = pd.read_csv("/kaggle/input/drug-classification/drug200.csv")

data.head()

Then, we separate the features and the output (drug) columns:

X = data.iloc[:, :-1].values

y = data.iloc[:, -1].valuesPreparation for Ann

Before jumping to Ann, we need to prepare data for it in a way that the algorithm understands. These steps for preparation are almost the same for any other classification algorithm.

The first step is Encoding. We need to transfer our categorical data to numeric values. First, we start with the Sex column. Since it is binary, we can use the LabelEncoder:

from sklearn.preprocessing import LabelEncoder

le = LabelEncoder()

X[:, 1] = le.fit_transform(X[:, 1])Then, we need to encode Blood Pressure Levels (BP) and Cholesterol Levels. We use OneHotEncoder and ColumnTransformer to encode:

from sklearn.compose import ColumnTransformer

from sklearn.preprocessing import OneHotEncoder

import numpy as np

ct = ColumnTransformer(transformers=[('encoder', OneHotEncoder(), [2, 3])], remainder='passthrough')

X = np.array(ct.fit_transform(X))The last encode is for the output column (Drug). Since we have five drug types, we do not use LabelEncoder since the target data column is not binary. Therefore we use OneHotEncoder:

encoder = OneHotEncoder()

encoded_Y = encoder.fit(y.reshape(-1,1))

y = encoded_Y.transform(y.reshape(-1,1)).toarray()Now that we encoded everything, we need to split them to train and test datasets:

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)The last step is Scaling which means we standardize the data values to be almost between -3 and 3:

from sklearn.preprocessing import StandardScaler

sc = StandardScaler()

X_train = sc.fit_transform(X_train)

X_test = sc.transform(X_test)Note: We always need Scaling for any task with Ann.

ANN

Now that everything is ready, we can implement our network. To do this we use the keras library in tensorflow:

https://www.tensorflow.org/api_docs/python/tf/keras

First, we initiate our Ann:

import tensorflow as tf

ann = tf.keras.models.Sequential()As we discussed, the input layer in Ann are our features. So we need to set some hidden layers and the output layer. We use two hidden layers for our network in this task. We also need to set an activation function.

What is the activation function? This is the function that indicates a neuron state (on/off) in Ann's brain.

There are many activation functions for different purposes. In this task, we use the ReLU function. https://en.wikipedia.org/wiki/Rectifier_(neural_networks)

ann.add(tf.keras.layers.Dense(units=12, activation='relu'))

ann.add(tf.keras.layers.Dense(units=12, activation='relu'))Note: The number of units is set for this task. You can tune it.

The next step is to set the output layer. It is exactly like the hidden layers except that:

- We need a different activation function since our output is not binary.

- The number of units is equal to the number of drug types (five in this case)

number_of_possible_outcomes = len(set(data["Drug"]))

ann.add(tf.keras.layers.Dense(units=number_of_possible_outcomes, activation='softmax'))POINT: Here we used the softmax activation function. This function is proper when we are dealing with a multi-class classification. https://en.wikipedia.org/wiki/Softmax_function

Note: If you are dealing with a binary-class classification, you can use ReLU for the output layer too.

The next step is to compile our network to build it. The compile function needs three arguments: optimizer, loss function, and metrics.

ann.compile(optimizer='adam', loss='categorical_crossentropy', metrics=['accuracy'])What are the optimizer, loss function, and metrics? Ann is a graph. Like every other graph, there are many paths between two nodes (input and output nodes). So the question is: how can Ann decide what is the most optimum and correct path? These three arguments are for answering the above question. The first two are helping Ann to find the optimum path, and the last one is helping Ann to decide about the path correctness.

Note: If you are dealing with a binary-class classification, replace the loss function with binary_crossentropy.

The last step is to train Ann:

ann.fit(X_train, y_train, batch_size=32, epochs=100)What is batch_size? For performance sake, we do not run the Ann for all sample train data at once. We divide the data into smaller sets. Batch_size is the small set size we use. What is epoch? We do not run Ann only once to train. We need to run it multiple times in most cases. Epoch indicates how many times the Ann runs on the train data.

Note: Think about epoch and batch_size as two nested loops on the train data. The first loop is the epoch and the inner loop is batch_size.

Prediction and accuracy

At last, we can use our trained Ann to predict the test data and then we evaluate the accuracy of Ann’s prediction.

y_pred = ann.predict(X_test)To calculate the accuracy score, first, we need to extract the prediction from the Ann prediction result:

y_pred_outcome = []

for output in y_pred:

output_list = [0] * number_of_possible_outcomes

output_list[np.where(output == max(output))[0][0]] = 1

y_pred_outcome.append(output_list)Then, we check the accuracy:

from sklearn.metrics import accuracy_score

accuracy_score(y_test, y_pred_outcome)Final and important Note: The accuracy of your Ann may change on each run. The reason is that the Ann we have now has many random decisions in it. If you like to always get the same result, you can set a seed in python for all random decisions. Read this for a better explanation:

https://stackoverflow.com/questions/58241065/result-changes-every-time-i-run-neural-network-code

The End.